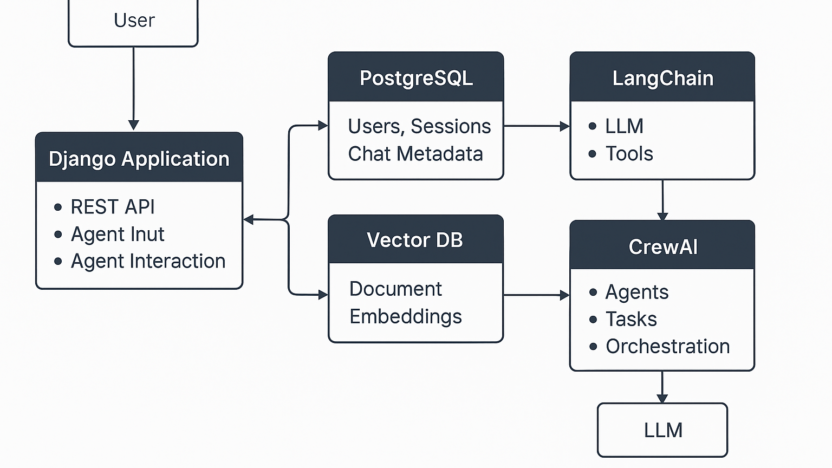

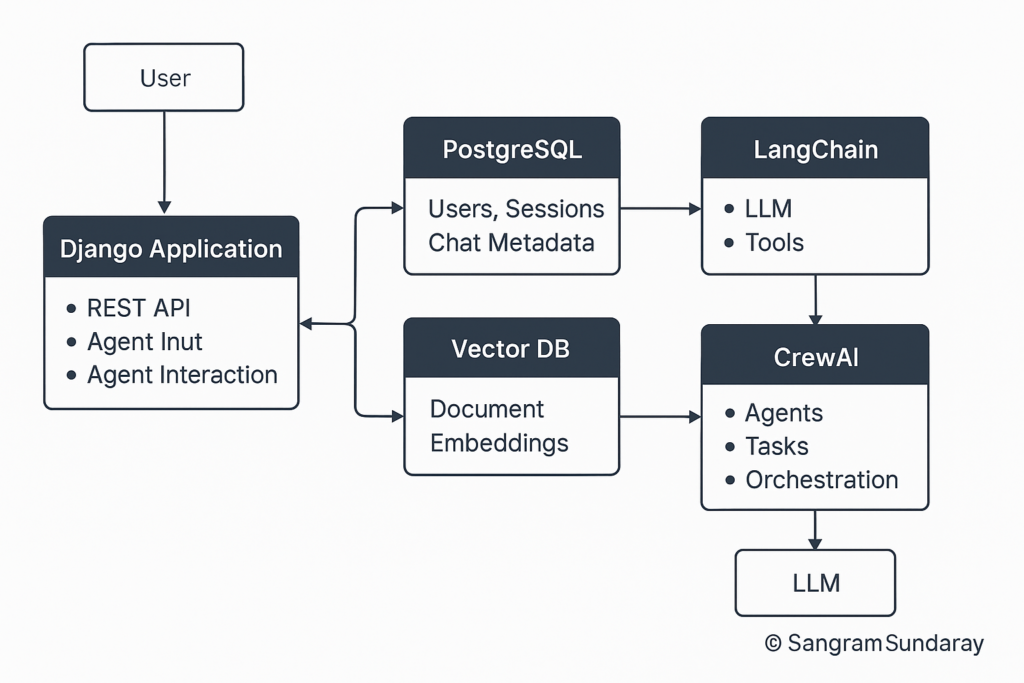

As AI agents become increasingly sophisticated, integrating multiple technologies is essential for building robust, intelligent applications. This blog post outlines the architecture of an AI Agent App utilizing:

- Python + Django: Backend and API layer

- CrewAI: Multi-agent orchestration

- LangChain: LLM interaction and toolchains

- PostgreSQL: Structured data storage

- Vector DB (e.g., Chroma or Weaviate): Semantic retrieval of documents (Crew AI Crash Course (Step by Step) · Alejandro AO)

This architecture is suitable for AI-driven assistants, advisors, or workflow automation tools.

🔧 Tech Stack Overview

| Component | Technology Used | Purpose |
|---|---|---|
| Web Framework | Django (Python) | REST API, views, authentication |
| Agent Runtime | CrewAI | Multi-agent task delegation and collaboration |
| LLM Interface | LangChain | Prompt chaining, memory, tools |
| Relational DB | PostgreSQL | Users, sessions, structured data |
| Vector DB | Chroma / Weaviate | Document search and retrieval using embeddings |
| LLM Backend | Gemini API / OpenAI | Natural language reasoning |
| Deployment | GCP Cloud Run / Docker | Scalable, serverless backend |

🧩 Component Breakdown

1. Django Backend (API Layer)

- RESTful API: Uses `django-rest-framework` to interface with front-end or external clients.

- Authentication & Permissions: Authenticates users and enforces per-endpoint permissions.

- Input Validation: Checks request payloads for integrity and safety before they reach the agents.

- Database Integration: Connects to PostgreSQL (through the Django ORM) and to the vector DB.

- Endpoints: Exposes endpoints such as `/ask`, `/chat`, and `/upload_doc`.
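The input-validation step can be sketched without Django at all. The snippet below is a framework-agnostic sketch, assuming an `/ask` JSON body with a required `question` string and an optional `session_id` UUID; the field names, the 2,000-character limit, and `validate_ask_payload` are hypothetical. In `django-rest-framework` the same rules would normally live in a `Serializer`.

```python
import json
import uuid
from dataclasses import dataclass
from typing import Optional

MAX_QUESTION_CHARS = 2000  # hypothetical limit; tune to the LLM's context budget


class ValidationError(ValueError):
    """Raised when an /ask payload fails validation."""


@dataclass(frozen=True)
class AskRequest:
    question: str
    session_id: Optional[uuid.UUID]


def validate_ask_payload(raw_body: str) -> AskRequest:
    """Parse and validate a hypothetical /ask JSON body."""
    try:
        data = json.loads(raw_body)
    except json.JSONDecodeError as exc:
        raise ValidationError("body must be valid JSON") from exc
    if not isinstance(data, dict):
        raise ValidationError("body must be a JSON object")

    # The question must be a non-empty string within the length limit.
    question = data.get("question")
    if not isinstance(question, str) or not question.strip():
        raise ValidationError("question must be a non-empty string")
    question = question.strip()
    if len(question) > MAX_QUESTION_CHARS:
        raise ValidationError(f"question exceeds {MAX_QUESTION_CHARS} characters")

    # session_id is optional, but if present it must parse as a UUID.
    session_id = None
    if data.get("session_id") is not None:
        try:
            session_id = uuid.UUID(str(data["session_id"]))
        except ValueError as exc:
            raise ValidationError("session_id must be a UUID") from exc

    return AskRequest(question=question, session_id=session_id)
```

A view would call this (or the equivalent serializer) before handing `question` to the agent layer, returning HTTP 400 when `ValidationError` is raised.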

2. PostgreSQL Database

Stores structured data such as:

- Users & sessions

- Audit logs and usage metrics

- Chat metadata and preferences (Build AI Web Apps with Django & LangChain | In Plain English – Medium, Building a RAG-based Query Resolution System with LangChain)

Example schema snippet:

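```sql
CREATE TABLE user_sessions (
    id UUID PRIMARY KEY DEFAULT gen_random_uuid(),  -- gen_random_uuid() is built in since PostgreSQL 13
    -- Django's built-in auth_user.id is an integer, so the foreign key must be INTEGER, not UUID
    user_id INTEGER NOT NULL REFERENCES auth_user(id) ON DELETE CASCADE,
    start_time TIMESTAMPTZ NOT NULL DEFAULT now(),
    end_time TIMESTAMPTZ  -- NULL while the session is still open
);
```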
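To make the usage-metrics role concrete, the sketch below computes total session time per user from a simplified `user_sessions` table. It uses Python's built-in `sqlite3` with an in-memory database purely as a stand-in for PostgreSQL, so column types are simplified and the sample rows are made up; `session_seconds_by_user` is a hypothetical helper.

```python
import sqlite3
import uuid

# In-memory SQLite stands in for PostgreSQL so the sketch runs without a server.
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE user_sessions (
        id TEXT PRIMARY KEY,        -- UUID stored as text in SQLite
        user_id INTEGER NOT NULL,   -- would reference auth_user(id) in PostgreSQL
        start_time TEXT NOT NULL,
        end_time TEXT               -- NULL while the session is still open
    )
    """
)

# Illustrative sample rows: (user_id, start_time, end_time)
rows = [
    (1, "2024-05-01 09:00:00", "2024-05-01 09:30:00"),
    (1, "2024-05-02 10:00:00", "2024-05-02 10:10:00"),
    (2, "2024-05-01 11:00:00", None),  # open session, excluded from the totals
]
conn.executemany(
    "INSERT INTO user_sessions (id, user_id, start_time, end_time) VALUES (?, ?, ?, ?)",
    [(str(uuid.uuid4()), *row) for row in rows],
)


def session_seconds_by_user(connection: sqlite3.Connection) -> dict[int, int]:
    """Total closed-session time per user, in seconds."""
    query = """
        SELECT user_id,
               SUM(strftime('%s', end_time) - strftime('%s', start_time))
        FROM user_sessions
        WHERE end_time IS NOT NULL
        GROUP BY user_id
    """
    return {user_id: int(total) for user_id, total in connection.execute(query)}


print(session_seconds_by_user(conn))  # {1: 2400}
```

Against PostgreSQL the duration term would be `EXTRACT(EPOCH FROM end_time - start_time)`, and in a Django project the query would more likely be written with the ORM.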

3. Vector Database (e.g., Chroma)

Used for semantic search. When users upload documents (PDFs, policy files), they’re split into chunks and embedded using LangChain:

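```python
from langchain_chroma import Chroma
from langchain_openai import OpenAIEmbeddings
from langchain_text_splitters import RecursiveCharacterTextSplitter

# `pages`: LangChain Documents already loaded from the upload (e.g. by a PDF loader)
splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)
docs = splitter.split_documents(pages)

# Embed each chunk with OpenAI and store the vectors in a Chroma collection
vector_store = Chroma.from_documents(docs, embedding=OpenAIEmbeddings())
```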

These embeddings are stored and later retrieved during agent tasks for context-aware responses.
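To show what "split into chunks, embed, and retrieve" means mechanically, here is a dependency-free sketch. It assumes fixed-size character chunks with overlap and substitutes a toy bag-of-words vector for a real embedding model, so similarity here is lexical rather than semantic; `chunk_text`, `embed`, `cosine`, and `top_k` are hypothetical names, and the policy snippets are invented for illustration.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into character windows of `size` that overlap by `overlap`."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be in [0, size)")
    if not text:
        return []
    step = size - overlap
    # Stopping at len - overlap avoids tail chunks fully contained in the previous one.
    return [text[i : i + size] for i in range(0, max(len(text) - overlap, 1), step)]


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_k(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


policies = [
    "Family Takaful plan covering hospitalisation and surgical expenses.",
    "Motor Takaful plan for private cars and motorcycles.",
    "General Takaful cover for fire and household contents.",
]
print(top_k("takaful hospitalisation surgical", policies, k=1))  # the Family Takaful snippet
```

A vector DB such as Chroma performs the same nearest-neighbour ranking, but over dense model embeddings and with an index instead of a full sort.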

4. LangChain (LLM Middleware)

LangChain integrates various components:

- Tools: Exposes capabilities such as search, a calculator, or database access that the LLM can call.

- Prompt Chaining: Composes prompts, model calls, and conversation memory into multi-step chains.

- Agent Behavior: Wraps the agent's reasoning loop into a callable executor. (My Journey Exploring AI Agents: LangChain, Crew AI, and … – GitHub, Build AI Web Apps with Django & LangChain | In Plain English – Medium)

Example:

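```python
from langchain.agents import AgentType, initialize_agent
from langchain.memory import ConversationBufferMemory
from langchain_openai import ChatOpenAI

memory = ConversationBufferMemory(memory_key="chat_history")  # key the conversational agent reads

agent_executor = initialize_agent(
    tools=[vector_search_tool, calculator],  # LangChain Tool objects defined elsewhere
    llm=ChatOpenAI(),
    agent=AgentType.CONVERSATIONAL_REACT_DESCRIPTION,  # the parameter is `agent`, not `agent_type`
    memory=memory,
)
```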
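The `calculator` tool referenced above is not defined in the snippet. One way to back it is a small arithmetic evaluator that walks Python's `ast` instead of calling `eval`, so text produced by the LLM cannot execute arbitrary code. This is a sketch: `safe_calculate` is a hypothetical name, and wrapping it for LangChain (for example with the `@tool` decorator from `langchain_core.tools`) is left out so the code runs with the standard library alone.

```python
import ast
import operator

# Only these operators are allowed; any other syntax is rejected.
_BIN_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
    ast.Mod: operator.mod,
}
_UNARY_OPS = {ast.UAdd: operator.pos, ast.USub: operator.neg}


def safe_calculate(expression: str) -> float:
    """Evaluate a plain arithmetic expression such as '1200 * 0.05 + 30'.

    Raises SyntaxError for unparsable input and ValueError for disallowed syntax.
    """

    def _eval(node: ast.AST) -> float:
        if isinstance(node, ast.Expression):
            return _eval(node.body)
        # Accept int/float literals only (bool is a subclass of int, so exclude it).
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)) and not isinstance(node.value, bool):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BIN_OPS:
            left, right = _eval(node.left), _eval(node.right)
            # Rough guard against huge powers such as 9 ** 9 ** 9.
            if isinstance(node.op, ast.Pow) and abs(right) > 100:
                raise ValueError("exponent too large")
            return _BIN_OPS[type(node.op)](left, right)
        if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
            return _UNARY_OPS[type(node.op)](_eval(node.operand))
        raise ValueError(f"unsupported expression: {ast.dump(node)}")

    return _eval(ast.parse(expression, mode="eval"))
```

Function calls, names, and attribute access all fall through to the final `ValueError`, which is the point of an allow-list over `eval`.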

5. CrewAI (Multi-Agent Orchestration)

CrewAI defines agent roles and their collaboration:

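```python
from crewai import Crew, Process

# researcher_agent, advisor_agent, validator_agent and task_user_question
# are Agent/Task objects defined elsewhere (see the fields listed below)
crew = Crew(
    agents=[researcher_agent, advisor_agent, validator_agent],
    tasks=[task_user_question],
    process=Process.sequential,  # run tasks in order (CrewAI's default)
    verbose=True,
)

result = crew.kickoff()  # Crew is started with kickoff(), not run()
```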

Each agent has:

- Role

- Goal

- Backstory

- Tools & Access

- Task-Specific Prompts (in CrewAI these live on each Task's description and expected output)

This structure lets a complex request be decomposed into smaller tasks, each handled by the agent whose role and tools fit it best.
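The role-based hand-off can be illustrated without CrewAI itself. The sketch below is a plain-Python model of a sequential crew, assuming each agent is a function that receives the previous agent's output; the `Agent` dataclass and `run_sequential` here are hypothetical and are not CrewAI's classes, and the lambdas stand in for LLM calls.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Agent:
    """Hypothetical stand-in for an agent definition (not crewai.Agent)."""
    role: str
    goal: str
    backstory: str
    act: Callable[[str], str]  # placeholder for an LLM call plus tools


def run_sequential(agents: list[Agent], user_input: str) -> tuple[str, list[tuple[str, str]]]:
    """Feed each agent the previous agent's output and record a trace."""
    trace = []
    current = user_input
    for agent in agents:
        current = agent.act(current)
        trace.append((agent.role, current))
    return current, trace


researcher = Agent(
    role="Researcher",
    goal="Collect relevant policy excerpts",
    backstory="Knows the product document library.",
    act=lambda question: f"[excerpts about: {question}]",
)
advisor = Agent(
    role="Advisor",
    goal="Draft an answer from the excerpts",
    backstory="Explains insurance products in plain language.",
    act=lambda notes: f"Draft answer based on {notes}",
)
validator = Agent(
    role="Validator",
    goal="Check the draft before it is returned",
    backstory="Reviews answers against compliance rules.",
    act=lambda draft: draft if draft.startswith("Draft answer") else "REJECTED",
)

answer, trace = run_sequential([researcher, advisor, validator], "health Takaful options")
```

In CrewAI the equivalent wiring uses `Agent`, `Task`, and `Process.sequential`, where each task's output is passed as context to the next task.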

🧭 Workflow: A Sample Interaction

Let’s walk through a sample user query:

User Query: “What are the Shariah-compliant Takaful policies available for health coverage in Malaysia?”

🔄 Request Flow

- User Input: The question arrives via the Django REST API (`/ask` endpoint).

- CrewAI Orchestration: The request triggers a task for the Researcher Agent to fetch relevant data from:
  - Vector DB (semantic docs)
  - External tools or search APIs (if configured)

- Context Assembly with LangChain: LangChain combines search results, memory, and previous context for continuity.

- Validator Agent: Verifies Shariah compliance using rule-based filters or documents.

- Response to User: The final answer is returned via the Django API and optionally saved in PostgreSQL. (CREWAI RAG LANGCHAIN QDRANT – GitHub)
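The Validator Agent's rule-based filters could start as simple pattern checks run on the draft answer before it is returned. The sketch below assumes a hypothetical, deliberately tiny rule list (`RULES`) and a `check_answer` helper; the rules are placeholders showing the shape of such a filter, not an actual Shariah compliance standard.

```python
import re
from dataclasses import dataclass, field

# Hypothetical placeholder rules: (rule id, pattern that should NOT appear in an answer).
RULES = [
    ("no-conventional-interest", re.compile(r"\b(interest rate|interest-bearing)\b", re.I)),
    ("no-conventional-insurance-label", re.compile(r"\bconventional (insurance|policy)\b", re.I)),
]


@dataclass
class ValidationResult:
    approved: bool
    violations: list[str] = field(default_factory=list)


def check_answer(answer: str, require_source: bool = True) -> ValidationResult:
    """Apply placeholder compliance rules to a draft answer."""
    violations = [rule_id for rule_id, pattern in RULES if pattern.search(answer)]
    # Also require the answer to cite the document it was grounded in.
    if require_source and "Source:" not in answer:
        violations.append("missing-source")
    return ValidationResult(approved=not violations, violations=violations)
```

A rejected result could be sent back to the Advisor Agent for revision instead of being returned to the user.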

☁️ Deployment Notes

Recommended deployment stack on GCP: (Ionio-io/langgraph-with-crewai – GitHub)

- Cloud Run: Containerized Django backend

- Cloud SQL: Managed PostgreSQL

- Cloud Storage: For file uploads

- Chroma: Self-hosted or Docker container

- Gemini/OpenAI API: For LLM calls

Basic Dockerfile for Django + CrewAI app:

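```dockerfile
FROM python:3.11-slim

ENV PYTHONUNBUFFERED=1
WORKDIR /app

# Install dependencies first so this layer is cached between code changes
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
COPY . .

# Cloud Run injects $PORT (8080 by default); "app" is the Django project package
CMD exec gunicorn app.wsgi:application --bind 0.0.0.0:${PORT:-8080}
```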
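On Cloud Run, configuration such as database credentials is usually passed in through environment variables. A minimal sketch, assuming a single hypothetical `DATABASE_URL` variable of the form `postgres://user:password@host:5432/dbname`, of turning it into Django's `DATABASES` setting with the standard library only (the third-party `dj-database-url` package does the same job more thoroughly):

```python
import os
from urllib.parse import unquote, urlparse


def database_settings(url: str) -> dict:
    """Convert a postgres:// URL into a Django DATABASES['default'] dict."""
    parsed = urlparse(url)
    if parsed.scheme not in ("postgres", "postgresql"):
        raise ValueError(f"unsupported scheme: {parsed.scheme!r}")
    return {
        "ENGINE": "django.db.backends.postgresql",
        "NAME": parsed.path.lstrip("/"),
        # urlparse leaves credentials percent-encoded, so decode them here.
        "USER": unquote(parsed.username or ""),
        "PASSWORD": unquote(parsed.password or ""),
        "HOST": parsed.hostname or "",
        "PORT": str(parsed.port or 5432),
    }


# In settings.py (hypothetical variable name and local-development fallback):
DATABASES = {
    "default": database_settings(
        os.environ.get("DATABASE_URL", "postgres://app:app@localhost:5432/app")
    )
}
```

Keeping secrets in the environment (for example, populated from Secret Manager) means the same image can run locally and on Cloud Run unchanged.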

🔒 Security & Compliance

For applications in regulated industries (like Islamic finance), ensure:

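- Input Sanitization: Screen and normalize user input to reduce prompt-injection risk; filtering alone cannot fully prevent it.

- Agent Verification: Implement compliance checks, for example a validator agent that reviews every answer before it is returned.

- Logging and Audit Trails: Record inputs, agent decisions, and responses so they can be reviewed later. (CrewAI, GitHub – crewAIInc/crewAI: Framework for orchestrating role-playing …)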
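As a cheap first layer, input can be normalized, screened for common injection phrasings, and wrapped in delimiters that keep it apart from system instructions, with each decision written to an audit log. The patterns, the `<user_input>` delimiter, the `audit` logger name, and the function names below are hypothetical; this is a sketch of the idea rather than a complete defence.

```python
import json
import logging
import re
import unicodedata
from datetime import datetime, timezone

audit_logger = logging.getLogger("audit")

# Hypothetical heuristics; they catch only obvious attempts.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior|above) instructions", re.I),
    re.compile(r"\b(system prompt|developer message)\b", re.I),
    re.compile(r"</?user_input>", re.I),  # attempts to break out of the delimiter
]
MAX_CHARS = 2000


def screen_user_input(text: str, user_id: int) -> tuple[bool, str]:
    """Normalize input, flag likely injection attempts, and audit the decision."""
    # NFKC folds look-alike characters; then drop control/format characters
    # (e.g. zero-width spaces) except newline and tab.
    cleaned = unicodedata.normalize("NFKC", text)
    cleaned = "".join(ch for ch in cleaned if ch in "\n\t" or unicodedata.category(ch)[0] != "C")
    cleaned = cleaned[:MAX_CHARS]
    flagged = [p.pattern for p in SUSPICIOUS_PATTERNS if p.search(cleaned)]
    allowed = not flagged

    # One JSON line per decision, suitable for an append-only audit trail.
    audit_logger.info(json.dumps({
        "event": "input_screened",
        "user_id": user_id,
        "allowed": allowed,
        "flags": flagged,
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }))
    return allowed, cleaned


def wrap_for_prompt(cleaned: str) -> str:
    """Delimit user text so the system prompt can tell the model to treat it as data."""
    return f"<user_input>\n{cleaned}\n</user_input>"
```

On Cloud Run, log lines written to stdout or stderr are collected by Cloud Logging, so routing the `audit` logger there (or to a PostgreSQL audit table) keeps flagged inputs reviewable.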

🚀 Final Thoughts

This modular architecture enables developers to build intelligent, extensible AI systems combining structured data (PostgreSQL), unstructured knowledge (vector DB), and reasoning agents. CrewAI and LangChain together offer a solid foundation for complex AI workflows with memory, tools, and coordination.

Use Case Ideas:

- Legal assistants

- Enterprise knowledge management agents

References:

- Build AI Agents with Azure Database for PostgreSQL and Azure AI Agent …

- Building an Autonomous AI Agent with LangChain and PostgreSQL pgvector

- CrewAI GitHub Repository (GitHub – crewAIInc/crewAI: Framework for orchestrating role-playing …)

- PostgreSQL as a Vector Database: A Pgvector Tutorial