First, the Basics

Let’s decode the terms:

- AI = Artificial Intelligence (machines that can “think” or make decisions)

- ML = Machine Learning (a part of AI where machines learn from data)

- LLM = Large Language Model (such as the models behind ChatGPT and Gemini, formerly called Bard, trained to understand and generate human language)

- Ops = Operations (everything needed to run, monitor, and maintain systems)

So:

- MLOps = Operations for machine learning

- AIOps = IT operations powered by AI

- LLMOps = Operations for large language models

MLOps – Think DevOps for Machine Learning

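MLOps is about managing the full lifecycle of machine learning models, from raw data to a model running in production. It applies DevOps habits such as automation, version control, and monitoring to ML.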

🔍 What it Includes:

- Data collection and cleaning

- Training models

- Testing models

- Version control for models

- Deployment (putting the model into production)

- Monitoring performance

- Updating models when needed
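
To make a few of these steps concrete (training, testing, version control, and a deployment gate), here is a minimal sketch in plain Python. Everything in it is made up for illustration: the `ModelRegistry` class, the `min_accuracy` threshold, and the toy "model" that is just a single number. Real teams would normally rely on a dedicated tool rather than an in-memory list.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ModelVersion:
    version: int
    threshold: float  # the only "parameter" of our toy model
    accuracy: float   # accuracy measured on held-out test data


@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry: keeps every version, tracks the live one."""
    versions: List[ModelVersion] = field(default_factory=list)
    production: Optional[ModelVersion] = None

    def register(self, threshold, accuracy, min_accuracy=0.8):
        mv = ModelVersion(len(self.versions) + 1, threshold, accuracy)
        self.versions.append(mv)
        # Deployment gate: promote only if good enough AND better than the live model.
        live = self.production
        if accuracy >= min_accuracy and (live is None or accuracy > live.accuracy):
            self.production = mv
        return mv


def train(samples):
    """Toy 'training': put the threshold halfway between the two class means."""
    pos = [x for x, label in samples if label == 1]
    neg = [x for x, label in samples if label == 0]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2


def evaluate(threshold, samples):
    """Fraction of samples where 'x > threshold' matches the label."""
    correct = sum((x > threshold) == bool(label) for x, label in samples)
    return correct / len(samples)


train_data = [(1.0, 0), (2.0, 0), (8.0, 1), (9.0, 1)]
test_data = [(1.5, 0), (3.0, 0), (7.0, 1), (9.5, 1)]

registry = ModelRegistry()
threshold = train(train_data)
registry.register(threshold, evaluate(threshold, test_data))

# A deliberately bad candidate: it is stored as version 2 but never goes live.
registry.register(0.0, evaluate(0.0, test_data))
print(registry.production)
```

The point of the sketch is the gate inside `register`: every candidate is versioned, but only one that passes testing and beats the current model reaches production.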

🧑‍💻 Who uses it?

- Data scientists

- ML engineers

- Software developers

✅ Goal:

To make sure ML models work well in real-world applications and keep improving over time.

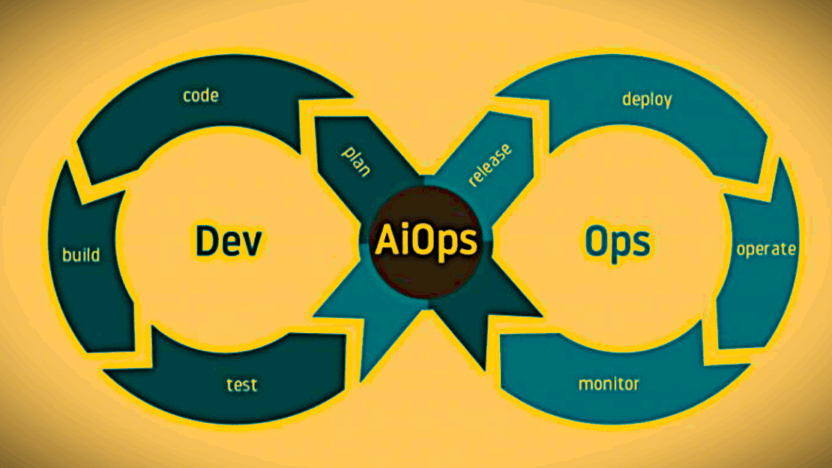

🤖 AIOps – AI for IT Operations

AIOps means using AI to automate and improve IT operations.

🔍 What it Includes:

- Monitoring servers and apps

- Detecting issues or outages

- Analyzing logs

- Predicting incidents before they happen

- Automating root-cause analysis and fixes
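
As a small taste of the "detecting issues" step, here is a sketch that flags unusual values in a stream of metrics by comparing each new value with the average of the few values before it. The function name `find_anomalies`, the window size, the threshold of 3, and the latency numbers are all assumptions chosen for this example. Production AIOps tools use far more sophisticated models.

```python
from statistics import mean, stdev


def find_anomalies(values, window=5, z_threshold=3.0):
    """Return indexes whose value is far from the mean of the previous `window` values."""
    anomalies = []
    for i in range(window, len(values)):
        baseline = values[i - window:i]
        mu, sigma = mean(baseline), stdev(baseline)
        if sigma == 0:
            # Flat baseline: any change at all is treated as suspicious.
            if values[i] != mu:
                anomalies.append(i)
            continue
        # z-score: how many standard deviations away from the recent average.
        if abs(values[i] - mu) / sigma > z_threshold:
            anomalies.append(i)
    return anomalies


# Made-up response times in milliseconds, with one obvious spike.
latency_ms = [100, 102, 98, 101, 99, 100, 103, 450, 101, 99]
spikes = find_anomalies(latency_ms)
print(spikes)  # [7]
```

One limitation worth knowing: after a spike, the spike itself becomes part of the baseline for a while, which makes the detector less sensitive until it slides out of the window.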

🧑‍💻 Who uses it?

- IT operations teams

- DevOps engineers

- System admins

✅ Goal:

To reduce downtime, quickly resolve problems, and keep systems running smoothly using AI.

🧾 LLMOps – Managing Large Language Models

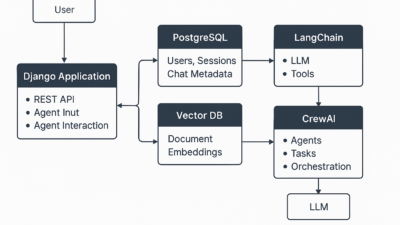

LLMOps is a newer field focused on building, deploying, and maintaining large language models, such as the GPT models behind ChatGPT.

🔍 What it Includes:

- Fine-tuning or customizing models

- Managing prompts and context windows

- Handling data privacy and security

- Monitoring outputs for safety and bias

- Deploying models efficiently (e.g., in the cloud or on edge devices)

- Optimizing cost and performance
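
To show what "managing prompts and context windows" and "optimizing cost" can look like in practice, here is a rough sketch. It assumes a hypothetical token budget, a made-up flat price per 1,000 tokens, and a crude word count standing in for a real tokenizer. None of these names or numbers come from a specific LLM provider.

```python
def count_tokens(text):
    """Very rough stand-in for a real tokenizer: one token per whitespace-separated word."""
    return len(text.split())


def build_prompt(system, history, question, max_tokens):
    """Keep the system message and question; add the newest history turns that still fit."""
    budget = max_tokens - count_tokens(system) - count_tokens(question)
    if budget < 0:
        raise ValueError("system message and question alone exceed the context window")
    kept = []
    for turn in reversed(history):  # walk from newest to oldest
        cost = count_tokens(turn)
        if cost > budget:
            break  # stop at the first turn that no longer fits
        kept.append(turn)
        budget -= cost
    # Restore chronological order before assembling the prompt.
    return "\n".join([system, *reversed(kept), question])


def estimate_cost(prompt, price_per_1k_tokens):
    """Hypothetical pricing: a flat rate per 1,000 prompt tokens."""
    return count_tokens(prompt) / 1000 * price_per_1k_tokens


history = [
    "User: What is MLOps?",
    "Bot: Operations for machine learning models.",
    "User: And AIOps?",
    "Bot: Using AI to run IT operations.",
]
prompt = build_prompt(
    system="You are a helpful assistant.",
    history=history,
    question="User: What about LLMOps?",
    max_tokens=20,
)
print(prompt)
print(estimate_cost(prompt, price_per_1k_tokens=0.5))
```

With a budget of 20 "tokens", only the two most recent turns fit, so the oldest part of the conversation is dropped. That trade-off between how much context you send and what it costs is a daily concern in LLMOps.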

🧑‍💻 Who uses it?

- AI product teams

- LLM developers

- Prompt engineers

- Research scientists

✅ Goal:

To make LLMs work reliably in apps such as chatbots, coding assistants, and search engines.

📊 Summary Table

| Feature | MLOps | AIOps | LLMOps |

|---|---|---|---|

| Focus Area | Machine learning model lifecycle | Automating IT operations | Managing large language models |

| Key Users | ML engineers, data scientists | IT Ops, DevOps teams | AI product teams, LLM developers |

| Main Goal | Deliver ML models into production | Keep IT systems stable with AI | Deploy and optimize language models |

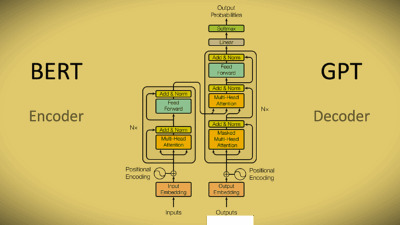

| Technologies | Python, ML frameworks, CI/CD tools | Monitoring tools + AI/ML | LLM APIs, vector DBs, prompt tools |

🧠 Quick Analogy

Think of it like a car company:

- MLOps is about designing and improving the engine (ML model) to run faster and better.

- AIOps is like using AI to monitor the factory machines, predict failures, and keep production smooth.

- LLMOps is about managing a specific kind of engine — a super-smart talking engine (LLM) — making sure it answers questions properly and doesn’t go off track.

🚀 Final Thoughts

Each “Ops” discipline serves a different purpose:

- MLOps helps build and ship ML models

- AIOps helps automate and optimize IT systems

- LLMOps helps manage and run large language models safely and effectively

As AI becomes a bigger part of our world, understanding these terms will help you stay ahead — whether you’re a techie or just curious.