This is a beginner-friendly article explaining how the Transformer model works in Large Language Models (LLMs), using the diagram above as a guide.

Imagine you’re teaching a smart robot how to read and write sentences. How does it understand the meaning of a sentence and predict what comes next? That’s where the Transformer model comes in — the core brain of LLMs like ChatGPT.

Let’s break it down step by step, in the simplest way possible.

📝 Step 1: Input — Words Come In

Let’s take a sentence:

“The animal crossed the”

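These words are fed into the model as its input. (Strictly speaking, LLMs split text into pieces called tokens, which are often whole words but can also be parts of words.)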

🔤 Step 2: Embeddings — Words Become Numbers

Computers don’t understand words directly, so each word is first converted into a list of numbers called an embedding.

Think of an embedding as a secret code for each word that captures what it means and how it relates to other words: words with similar meanings, like “street” and “road”, end up with similar lists of numbers.
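To make this concrete, here is a tiny sketch in Python of an embedding lookup. The six-word vocabulary, the 4-number embedding size, and the random values are all made up for illustration; in a real LLM the table is far larger and its numbers are learned during training.

```python
import random

# Toy vocabulary (an assumption for illustration): every word gets an integer ID.
vocab = ["the", "animal", "crossed", "street", "road", "jungle"]
word_to_id = {word: i for i, word in enumerate(vocab)}

EMBED_DIM = 4  # real models use hundreds or thousands of numbers per word

# The embedding table: one row of EMBED_DIM numbers per word.
# A real model learns these numbers; here they are just random.
random.seed(0)
embedding_table = [[random.uniform(-1, 1) for _ in range(EMBED_DIM)] for _ in vocab]

def embed(sentence):
    """Turn a sentence into a list of embedding vectors, one per word."""
    ids = [word_to_id[w] for w in sentence.lower().split()]
    return [embedding_table[i] for i in ids]

vectors = embed("The animal crossed the")
print(len(vectors), "words, each as", len(vectors[0]), "numbers")
```

Notice that both copies of “the” get exactly the same vector: at this stage the model knows nothing yet about a word’s position or its neighbours. Working that out is the Transformer’s job.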

🧱 Step 3: Transformer — The Real Magic

This is the heart of it.

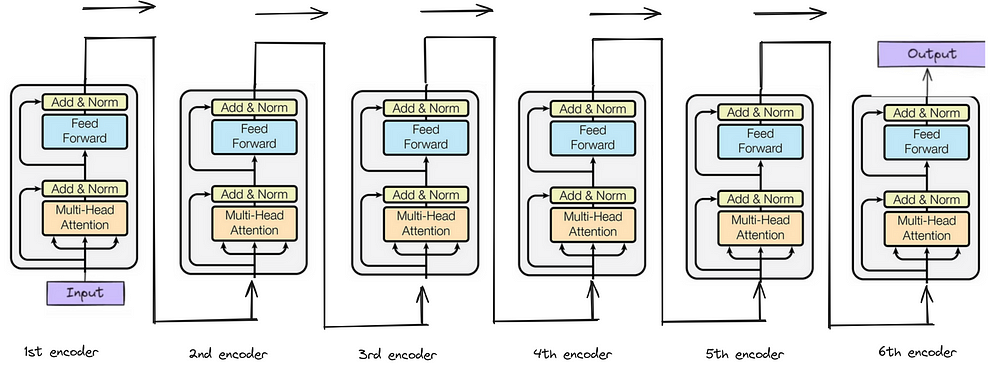

The original Transformer has two parts:

- Encoder (used more in translation and understanding)

- Decoder (used for generating words)

LLMs like ChatGPT use only the decoder (a “decoder-only” Transformer) to predict the next word.

The decoder looks at the words in the input and uses something called self-attention, where each word can look at itself and the words before it, to work out:

- Which words are important?

- How are they related?

- What should come next?

So, it might realize that in “The animal crossed the…”, something like “street” makes the most sense.
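Here is a minimal sketch of the core calculation, called scaled dot-product attention, with the “look only at earlier words” rule that a decoder follows. To keep it short, it assumes a single attention head and uses the word vectors directly as the queries, keys and values; real models first pass them through learned linear layers and run many heads in parallel. The 2-number vectors are hypothetical.

```python
import math

def softmax(scores):
    """Turn a list of scores into probabilities that add up to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def causal_self_attention(queries, keys, values):
    """Each word i attends only to words 0..i (itself and earlier words)."""
    d = len(queries[0])
    outputs = []
    for i, q in enumerate(queries):
        # How relevant is each earlier word j to word i? (scaled by sqrt(d))
        scores = [dot(q, keys[j]) / math.sqrt(d) for j in range(i + 1)]
        weights = softmax(scores)
        # Blend the earlier words' value vectors using those weights.
        mixed = [sum(w * values[j][k] for j, w in enumerate(weights))
                 for k in range(len(values[0]))]
        outputs.append(mixed)
    return outputs

# Hypothetical vectors for "The animal crossed the"
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [1.0, 0.0]]
out = causal_self_attention(x, x, x)
print(out[0])  # the first word can only attend to itself: [1.0, 0.0]
```

Each output vector is a mix of the current word and the words before it, weighted by how relevant they are. Because of the causal rule, changing a later word never changes the outputs for earlier words.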

🔢 Step 4: Linear & Softmax — Guessing the Next Word

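The Transformer’s output is a vector of numbers, not yet a word. Two final layers turn it into a guess:

- The Linear layer turns that vector into a score for every word in the model’s vocabulary.

- The Softmax layer converts those scores into probabilities that add up to 100%.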
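These two layers are short enough to write out by hand. In this sketch the three-word vocabulary, the hidden vector, and the weight numbers are all hypothetical; a real model learns the weights and has a score for every word it knows.

```python
import math

vocab = ["road", "street", "jungle"]  # toy 3-word vocabulary

def linear(hidden, weights, bias):
    """One score (logit) per vocabulary word: dot(weight row, hidden) + bias."""
    return [sum(w * h for w, h in zip(row, hidden)) + b
            for row, b in zip(weights, bias)]

def softmax(logits):
    """Turn scores into probabilities that add up to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

hidden = [0.5, -1.0, 2.0]      # hypothetical output vector from the Transformer
weights = [[0.2, 0.1, 0.3],    # hypothetical learned numbers for "road"
           [1.0, -0.5, 0.8],   # ... for "street"
           [0.4, 0.3, 0.6]]    # ... for "jungle"
bias = [0.0, 0.0, 0.0]

logits = linear(hidden, weights, bias)
probs = softmax(logits)
for word, p in zip(vocab, probs):
    print(f"{word}: {p:.0%}")
```

The higher a word’s score, the larger its share of the probability, but every word keeps some chance.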

For example, simplified to just three candidate words:

- “road” → 10%

- “street” → 60%

- “jungle” → 30%

In the simplest approach (called greedy decoding), it picks the most likely one: “street”.
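Picking the word is the easy part. This sketch reuses the example percentages above. The second choice is added only for comparison: instead of always taking the top word, a system can pick randomly in proportion to the probabilities, which is one common way chat models add variety.

```python
import random

# The example probabilities from the text above.
probs = {"road": 0.10, "street": 0.60, "jungle": 0.30}

# Greedy decoding: always take the single most likely word.
greedy_choice = max(probs, key=probs.get)
print(greedy_choice)  # street

# For comparison, sampling: pick at random, weighted by probability,
# so "street" comes out about 60% of the time and "jungle" about 30%.
sampled_choice = random.choices(list(probs), weights=list(probs.values()), k=1)[0]
print(sampled_choice)
```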

🗣️ Step 5: Output — Next Word is Given

Finally, the model says:

“The animal crossed the street.”

If you ask for more, the process continues: the new word is added to the end of the input, and the model runs again to predict the next one. It works one word at a time, using everything it has seen so far.
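This loop is simple enough to sketch. Here `FAKE_MODEL` and `next_word_probs` are hypothetical stand-ins for the whole Transformer, Linear and Softmax pipeline: a hand-written table that only looks at the last two words, whereas a real LLM computes its probabilities from all previous words. The `<end>` marker is also an assumption for illustration.

```python
# Hypothetical stand-in for the model: maps the last two words to
# next-word probabilities. A real LLM uses ALL previous words.
FAKE_MODEL = {
    ("crossed", "the"): {"road": 0.1, "street": 0.6, "jungle": 0.3},
    ("the", "street"): {"<end>": 0.8, "and": 0.2},
}

def next_word_probs(words):
    """Look up next-word probabilities; stop if the context is unknown."""
    return FAKE_MODEL.get(tuple(words[-2:]), {"<end>": 1.0})

def generate(prompt, max_new_words=10):
    words = prompt.split()
    for _ in range(max_new_words):
        probs = next_word_probs(words)
        best = max(probs, key=probs.get)  # greedy: take the most likely word
        if best == "<end>":
            break
        words.append(best)  # the new word becomes part of the next input
    return " ".join(words)

print(generate("The animal crossed the"))
```

The key idea is the `words.append(best)` line: each prediction is fed back in, so the model always predicts from the full text so far.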

🎯 Summary: What Makes Transformers Powerful?

- 🔍 Attention: They focus on the right words.

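- 🔁 Context: They use everything that came before, up to a limit called the context window.

- ⚡ Fast & Parallel: They process all the words of the input at the same time instead of one after another, which makes training fast.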

That’s how LLMs like ChatGPT understand and generate human-like text — word by word, using a Transformer model.