Using Django, LangChain, PostgreSQL, Vector DB, and GCP

In this post, we’ll break down a modern chatbot web application architecture using:

✅ Django

✅ LangChain

✅ PostgreSQL

✅ Vector Database (like Pinecone or Chroma)

✅ Google Cloud Platform (GCP)

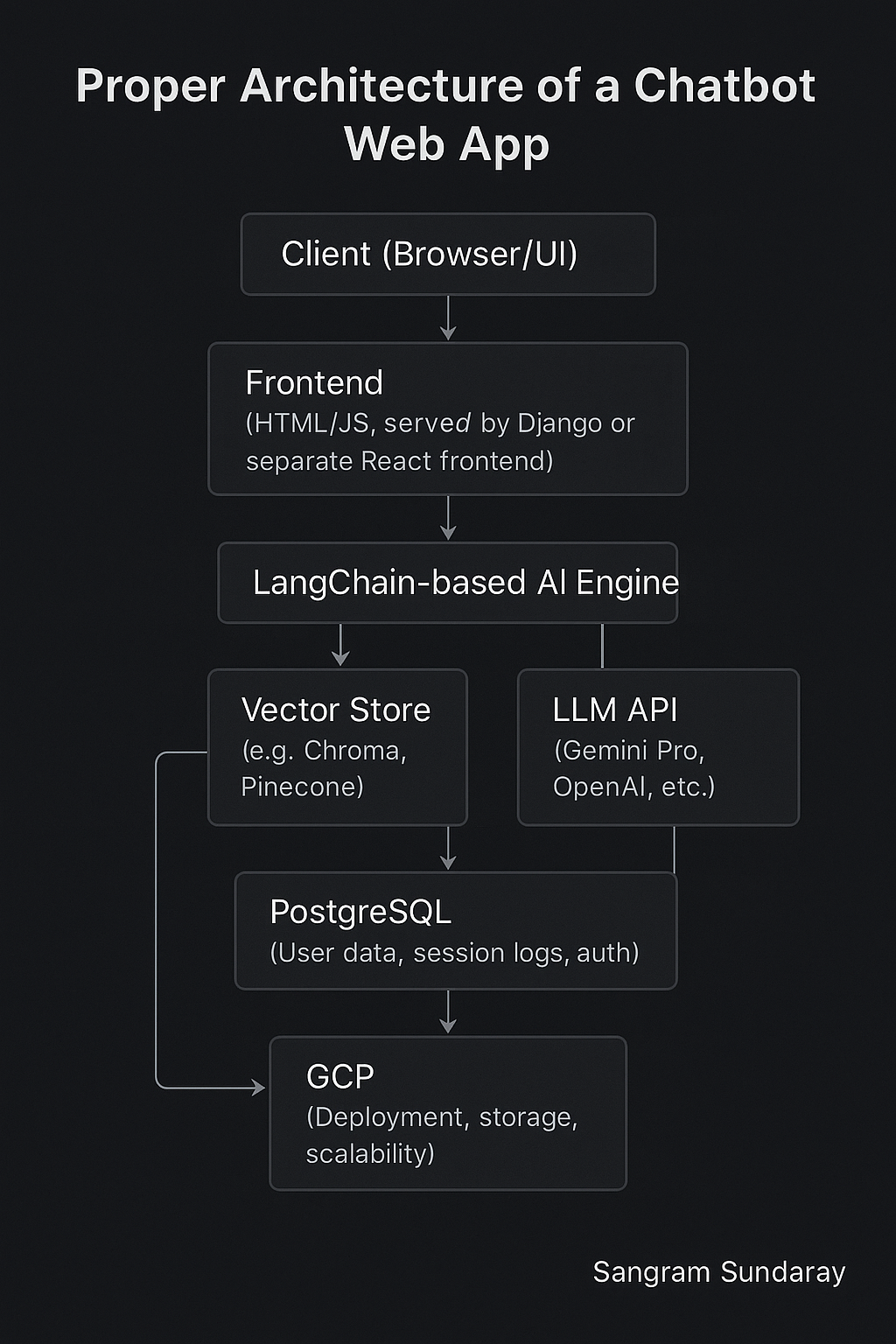

🧱 1. Architecture Overview

At a high level, here’s what the chatbot system looks like:

Client (Browser/UI)

|

Frontend (HTML/JS, served by Django or separate React frontend)

|

Django Backend (REST or WebSocket API)

|

LangChain-based AI Engine

├── Vector Store (e.g., Chroma, Pinecone)

└── LLM API (Gemini Pro, OpenAI, etc.)

|

PostgreSQL (User data, session logs, auth)

|

GCP (Deployment, storage, scalability)

🧠 2. LangChain-Powered Chat Engine

LangChain is the brain behind the scenes. It lets you:

- Orchestrate calls to LLMs (e.g., Gemini Pro, OpenAI)

- Perform context-aware querying with vector search

- Handle tools, memory, and agent flows

LangChain makes it easy to modularize your chatbot logic. For example:

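from langchain.chains import RetrievalQA

from langchain_community.vectorstores import Chroma

from langchain_google_genai import ChatGoogleGenerativeAI, GoogleGenerativeAIEmbeddings

embeddings = GoogleGenerativeAIEmbeddings(model="models/embedding-001")  # should match the model used when the documents were indexed

llm = ChatGoogleGenerativeAI(model="gemini-pro", temperature=0)  # temperature=0 keeps answers as repeatable as possible

retriever = Chroma(persist_directory="vector_db", embedding_function=embeddings).as_retriever()

qa_chain = RetrievalQA.from_chain_type(llm=llm, retriever=retriever)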
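
To make the chain less of a black box, here is a rough, library-free sketch of the retrieve-then-generate pattern that a retrieval QA chain follows. It is not LangChain's implementation; `build_prompt`, `answer`, and the stub retriever and LLM are hypothetical names used only for illustration.

```python
from typing import Callable, List


def build_prompt(question: str, chunks: List[str]) -> str:
    """Place the retrieved chunks into a single prompt ahead of the question."""
    context = "\n\n".join(chunks)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


def answer(
    question: str,
    retrieve: Callable[[str], List[str]],
    llm: Callable[[str], str],
) -> str:
    """Retrieve relevant chunks, build the prompt, then call the model."""
    chunks = retrieve(question)
    return llm(build_prompt(question, chunks))


# Stand-ins so the sketch runs without a vector store or an API key.
def fake_retrieve(question: str) -> List[str]:
    return ["Refunds are processed within 5 business days."]


def fake_llm(prompt: str) -> str:
    return f"(model output for a prompt of {len(prompt)} characters)"


if __name__ == "__main__":
    print(answer("How long do refunds take?", fake_retrieve, fake_llm))
```

With the real chain, the equivalent call would be along the lines of `qa_chain.invoke({"query": "How long do refunds take?"})["result"]`.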

🖥️ 3. Django – The Backend Framework

Django serves as the backbone of the application. It handles:

- User authentication (admin, customers, agents)

- API endpoints (/chat, /history, /feedback)

- Session management

- Admin UI to manage users, queries, logs, and documents

Structure your Django app into modules like:

- core: common utilities

- chat: chat session handling

- api: DRF-based endpoints for frontend

- vector_store: indexing and retrieval logic

💡 Tip: Use Django REST Framework (DRF) for REST endpoints, and Django Channels if you want real-time chat over WebSockets.
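
Whichever transport you pick, the chat endpoint should validate its input before any AI logic runs. Below is a minimal, framework-free sketch of that step. The field names `session_id` and `message`, the 2000-character limit, and the `ChatRequest`, `BadRequest`, and `parse_chat_request` names are all assumptions for illustration, not part of Django or DRF.

```python
import json
from dataclasses import dataclass


@dataclass
class ChatRequest:
    session_id: str
    message: str


class BadRequest(ValueError):
    """Raised when the request body cannot be used as a chat request."""


def parse_chat_request(body: bytes, max_length: int = 2000) -> ChatRequest:
    """Validate a JSON request body before any chat logic runs."""
    try:
        data = json.loads(body)
    except (json.JSONDecodeError, UnicodeDecodeError) as exc:
        raise BadRequest("body must be valid JSON") from exc
    if not isinstance(data, dict):
        raise BadRequest("body must be a JSON object")

    session_id = data.get("session_id")
    message = data.get("message")
    if not isinstance(session_id, str) or not session_id:
        raise BadRequest("session_id is required")
    if not isinstance(message, str) or not message.strip():
        raise BadRequest("message is required")
    if len(message) > max_length:
        raise BadRequest(f"message exceeds {max_length} characters")
    # Strip surrounding whitespace so downstream code sees a clean message.
    return ChatRequest(session_id=session_id, message=message.strip())
```

In a Django view you would pass `request.body` to this function and turn a `BadRequest` into a 400 response. With DRF, a serializer would typically do the same job.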

🗃️ 4. PostgreSQL – For Structured Data

PostgreSQL is perfect for:

- User accounts and permissions

- Chat logs and history

- Feedback, ratings, intent tracking

- Admin audit logs

Tables to include:

- users: auth

- chat_sessions: session metadata

- chat_messages: logs per session

- vector_sources: indexed document metadata
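
As a rough sketch of how these four tables might relate, the snippet below creates them and reads back one session's history. Every column name here is an assumption, and it uses Python's built-in `sqlite3` with an in-memory database purely so it runs without a server. On PostgreSQL the types would differ (for example `TIMESTAMPTZ` for timestamps), and in a Django project you would normally define these as models and let migrations create the tables.

```python
import sqlite3

# Hypothetical columns; only the table names come from the list above.
SCHEMA = """
CREATE TABLE users (
    id INTEGER PRIMARY KEY,
    email TEXT NOT NULL UNIQUE
);
CREATE TABLE chat_sessions (
    id INTEGER PRIMARY KEY,
    user_id INTEGER NOT NULL REFERENCES users(id),
    started_at TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
);
CREATE TABLE chat_messages (
    id INTEGER PRIMARY KEY,
    session_id INTEGER NOT NULL REFERENCES chat_sessions(id),
    role TEXT NOT NULL CHECK (role IN ('user', 'assistant')),
    content TEXT NOT NULL,
    created_at TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
);
CREATE TABLE vector_sources (
    id INTEGER PRIMARY KEY,
    title TEXT NOT NULL,
    doc_type TEXT NOT NULL,
    indexed_at TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
);
"""


def create_schema() -> sqlite3.Connection:
    """Create the four tables in a throwaway in-memory database."""
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")
    conn.executescript(SCHEMA)
    return conn


def session_history(conn: sqlite3.Connection, session_id: int) -> list:
    """Return (role, content) pairs for one session in insertion order."""
    rows = conn.execute(
        "SELECT role, content FROM chat_messages WHERE session_id = ? ORDER BY id",
        (session_id,),
    )
    return rows.fetchall()


if __name__ == "__main__":
    conn = create_schema()
    conn.execute("INSERT INTO users (id, email) VALUES (1, 'a@example.com')")
    conn.execute("INSERT INTO chat_sessions (id, user_id) VALUES (10, 1)")
    conn.execute(
        "INSERT INTO chat_messages (session_id, role, content) VALUES (10, 'user', 'hi')"
    )
    print(session_history(conn, 10))
```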

🧠 5. Vector Database – Memory & Retrieval

AI chatbots need memory. That’s where vector databases come in.

Use Chroma (local) or Pinecone/Weaviate (cloud) to store vector embeddings of documents, FAQs, or previous chats.

- Embed text using Gemini or SentenceTransformers

- Store vectors with metadata (document type, tag, etc.)

- Retrieve semantically relevant chunks via similarity search

A vector store enables RAG (Retrieval-Augmented Generation): the bot retrieves relevant chunks first and passes them to the LLM as context, which is a must-have for domain-specific bots.
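
To show what "similarity search with metadata" means in practice, here is a toy in-memory version of the three steps above. `TinyVectorStore` is a hypothetical class, not the Chroma or Pinecone API, and the two-dimensional vectors are hand-written stand-ins for real embeddings, which come from a model and have hundreds of dimensions.

```python
import math
from typing import Dict, List, Optional, Tuple

Vector = List[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class TinyVectorStore:
    """Brute-force store: keeps everything in a list and scans it on search."""

    def __init__(self) -> None:
        self._items: List[Tuple[Vector, str, Dict[str, str]]] = []

    def add(self, vector: Vector, text: str, metadata: Dict[str, str]) -> None:
        self._items.append((vector, text, metadata))

    def search(
        self, query: Vector, k: int = 3, doc_type: Optional[str] = None
    ) -> List[Tuple[float, str]]:
        """Return up to k (score, text) pairs, optionally filtered by doc_type."""
        candidates = [
            item
            for item in self._items
            if doc_type is None or item[2].get("doc_type") == doc_type
        ]
        scored = [(cosine_similarity(query, vec), text) for vec, text, _ in candidates]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:k]


if __name__ == "__main__":
    store = TinyVectorStore()
    store.add([1.0, 0.0], "refund policy", {"doc_type": "faq"})
    store.add([0.0, 1.0], "shipping times", {"doc_type": "faq"})
    store.add([0.9, 0.1], "refund form", {"doc_type": "form"})
    print(store.search([1.0, 0.0], k=2))
```

A real vector database replaces the linear scan with an approximate nearest-neighbor index, but the inputs and outputs have the same shape.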

☁️ 6. Google Cloud Platform – Deployment & Scale

Deploy your chatbot on GCP for reliability, security, and performance:

- Cloud Run: Scalable container deployment for Django + LangChain

- Cloud SQL: Managed PostgreSQL instance

- Cloud Storage: Store uploaded documents, logs, or audio

- Secret Manager: Manage LLM API keys and DB secrets

- VPC + IAM: Private networking between services and access control
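
On Cloud Run, Secret Manager secrets can be exposed to the container as environment variables, and the platform sets `PORT` to tell the container where to listen. A minimal sketch of reading that configuration at startup follows. The variable names `DATABASE_URL` and `LLM_API_KEY`, and the `Settings` and `load_settings` names, are assumptions chosen for illustration.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class Settings:
    database_url: str
    llm_api_key: str
    port: int


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Read required settings and fail fast if any of them is missing."""
    required = ("DATABASE_URL", "LLM_API_KEY")  # hypothetical variable names
    missing = [name for name in required if not env.get(name)]
    if missing:
        raise RuntimeError("missing required settings: " + ", ".join(missing))
    return Settings(
        database_url=env["DATABASE_URL"],
        llm_api_key=env["LLM_API_KEY"],
        port=int(env.get("PORT", "8080")),  # fall back to 8080 for local runs
    )
```

Failing at startup rather than on the first request makes a misconfigured deployment show up immediately in the logs.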

Use Docker to package your app:

FROM python:3.11-slim

WORKDIR /app

COPY requirements.txt .

RUN pip install --no-cache-dir -r requirements.txt

COPY . .

CMD ["gunicorn", "project.wsgi:application", "--bind", "0.0.0.0:8080"]

🧩 7. Putting It All Together

Your Django views or API endpoints will call LangChain chains, retrieve documents from the vector DB, and log everything to PostgreSQL.

Example flow:

- User query is posted to /api/chat/

- Backend validates session/user

- LangChain engine:

  - Embeds query

  - Performs vector search

  - Calls LLM with context

- Response is streamed back to frontend

- Logs are saved to DB
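
The flow above can be sketched as a single generator function. This is an illustration under assumptions, not a definitive implementation: `handle_chat` and its parameters are hypothetical, and the retriever, LLM, and logger are passed in as plain callables so the example runs without any external services.

```python
from typing import Callable, Iterable, Iterator, List, Set


def handle_chat(
    session_id: str,
    query: str,
    active_sessions: Set[str],
    retrieve: Callable[[str], List[str]],
    stream_llm: Callable[[str, List[str]], Iterable[str]],
    log: Callable[[str, str, str], None],
) -> Iterator[str]:
    """Validate the session, retrieve context, stream the answer, then log.

    Because this is a generator, nothing runs (including the session
    check) until the caller starts iterating.
    """
    if session_id not in active_sessions:
        raise PermissionError("unknown or expired session")

    # Embedding the query and the vector search both happen inside retrieve().
    context = retrieve(query)

    parts: List[str] = []
    for chunk in stream_llm(query, context):
        parts.append(chunk)
        yield chunk  # forward each chunk to the client as it arrives

    # Log once the full response is known.
    log(session_id, "user", query)
    log(session_id, "assistant", "".join(parts))


if __name__ == "__main__":
    saved = []
    for piece in handle_chat(
        "s1",
        "How long do refunds take?",
        active_sessions={"s1"},
        retrieve=lambda q: ["Refunds are processed within 5 business days."],
        stream_llm=lambda q, ctx: iter(["About ", "5 business days."]),
        log=lambda sid, role, text: saved.append((sid, role, text)),
    ):
        print(piece, end="")
    print()
    print(saved)
```

In Django, a generator like this could be handed to `StreamingHttpResponse`, with the `log` callable writing rows to PostgreSQL.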

🎯 Conclusion

Building an AI chatbot requires thoughtful design. In this architecture each piece has a clear job: Django handles the API and authentication, LangChain orchestrates retrieval and LLM calls, PostgreSQL stores structured data, the vector database powers semantic search, and GCP takes care of deployment and scaling.

Whether you’re building a personal assistant or an Islamic finance advisor, this architecture offers clarity, modularity, and a clear path to production.

Ready to Build?

Spin up your Django app, integrate LangChain, connect to your vector DB, and deploy on GCP. You’re just a few steps away from launching an intelligent, scalable chatbot.